sparse double descent where network pruning aggravates overfitting

a brief introduction for the ICML paper of sparse double descent

Here I share our new work on network pruning “Sparse Double Descent: Where Network Pruning Aggravates Overfitting”. This work was mainly inspired by recent studies on model over-parameterization and lottery tickets hypothesis, where we explored and analyzed the generalization performance of sparse neural networks. Main conclusion: The double descent phenomenon exists in sparse neural networks-as sparsity increases, the test accuracy of the model will first decrease, then increase, and finally decrease again.

Motivation

Machine learning models are widely believed to have difficulty minimizing both bias and variance at once. Thus finding the most appropriate model requires balancing these two factors. Here shows the traditional bias-variance tradeoff curve: as model capacity increases, the training error decreases, while the test error first decreases and then increases.

However, in deep learning practice, large models often perform better than smaller models, despite traditional belief that too many parameters leads to overfitting. Studies have found that the relationship between test error and model capacity is not a U-shaped tradeoff, but rather a double descent curve, that is, as the number of model parameters increases, the test error first decreases, then increases, and then decreases again

That is to say, an over-parameterized neural network, instead of severely overfitting, may have better generalization performance! This contradicts the traditional belief directly.

The lottery tickets hypothesis

From this point of view, in an over-parameterized neural network, only a relatively small number of parameters play a role in optimization and generalization, while the remaining parameters only serve as redundant backups. The performance of models won’t be greatly affected when redundant parameters were pruned.

It appears that we can safely prune redundant parameters from our models without worrying about adverse effects. Moreover, pruned neural networks are believed to have better generalization properties according to Occam’s razor principle

In light of the double descent phenomenon, we wonder: Are the parameters removed by pruning completely redundant?

We investigate this question following the deep double descent setting

Sparse double descent

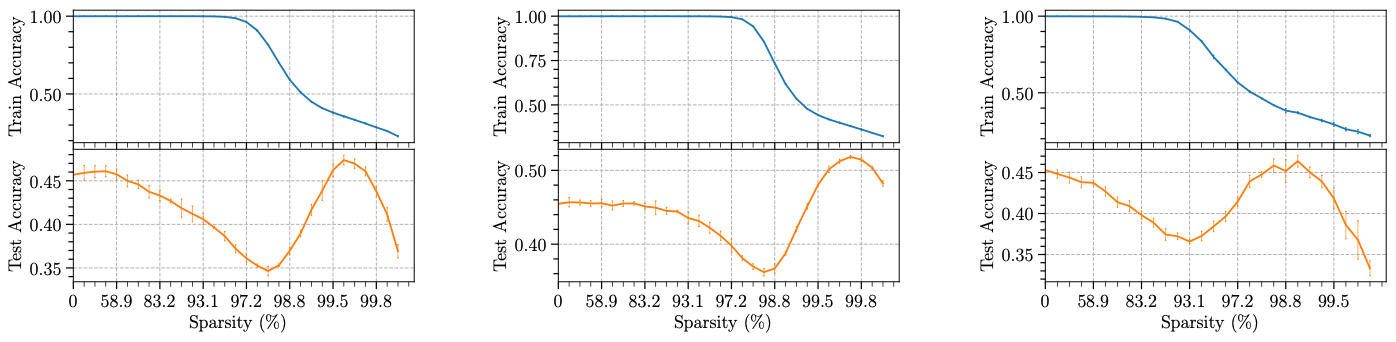

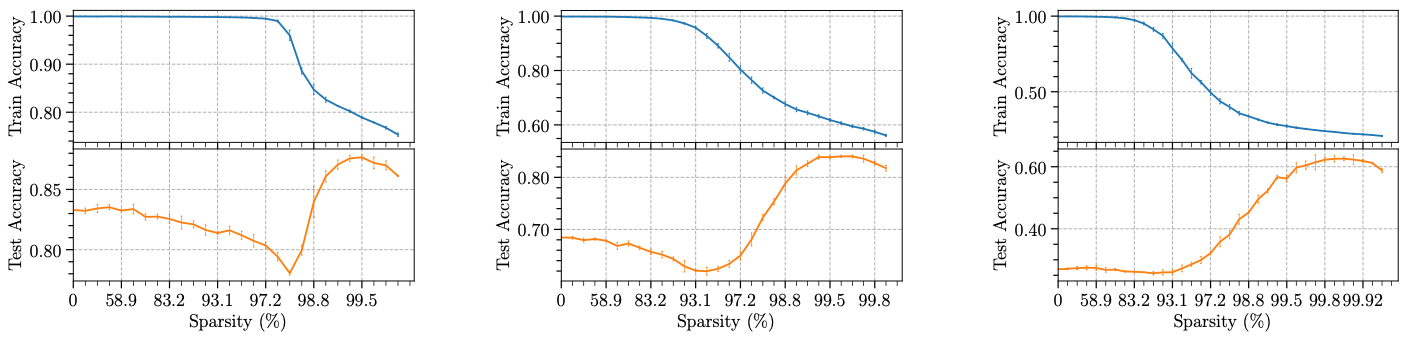

Experiments revealed that the “redundant” parameters in the network are not completely redundant. When increasing model sparsity through iterative pruning, even if the model training accuracy has not been affected, its test accuracy may decline significantly, where the model overfits noise. If the sparsity of the model is further increased, it can be found that after passing the interpolation threshold, the training accuracy of the model begins to drop rapidly, and the test accuracy begins to increase, and the robustness of the model to noise is gradually improved. If you continue to reduce the parameters of the model after the test accuracy rate reaches its highest point, the training and testing accuracy of the model decreases at the same time, where the model gradually loses its learning ability.

In addition, we also found that using different criteria for pruning, the resulting models have different model capacity/complexity even with the same amount of parameters. For example, for the interpolating threshold, the model pruned using magnitude-based method has higher sparsity, while the model pruned with random pruning corresponds to lower sparsity. It shows that random pruning damages the representative capability of the model more severely, and fewer parameters can be pruned to achieve the same effect.

While most of our experiments retrained the lottery ticket hypothesis, several other different approaches were also applied. Interestingly, a significant double drop can be observed even with finetuning after pruning. It can be seen that the phenomenon of sparse double descent is not limited to training a sparse network from initialization.

We also adjusted the label noise ratio in our experiments. Similar to the deep double descent, increasing the label noise ratio will make the starting point of the model training accuracy drop to move towards a higher model capacity (ie, lower sparsity). On the other hand, the higher label noise ratio, the more parameters need to be pruned to avoid overfitting.

Why sparse double descent happens?

Here we mainly investigate two possible explanations.

One is the Minima Flatness Hypothesis. Some papers point out that pruning can add perturbations to the model, which make it easier for the model to converge to a flat minimum

So, can minima flatness explain the sparse double descent?

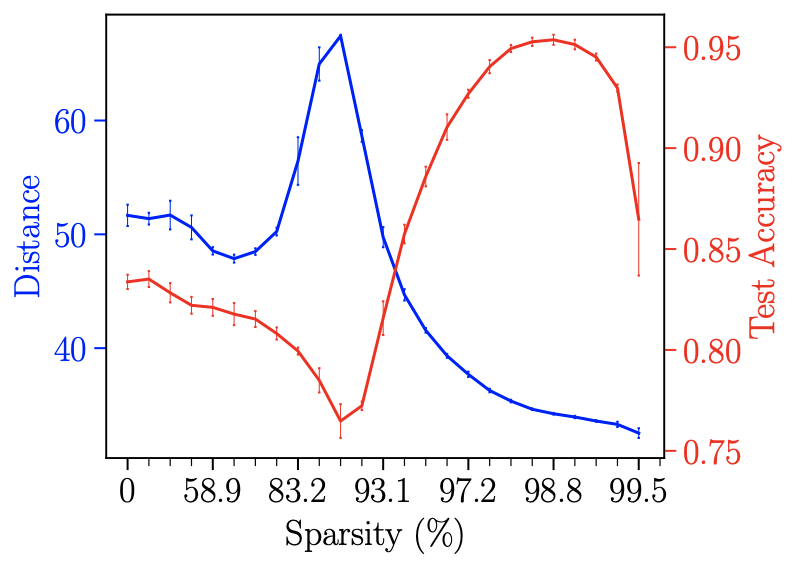

We visualized the loss as shown in the figure, and indirectly compared the flatness of the minima of the model under different sparsity.

Unfortunately, as sparsity increases, the loss curve becomes sharper. There is no evident correlation between the minima flatness and the test accuracy.

The other is the Learning Distance Hypothesis.

It has been proved theoretically that the complexity of deep learning models is closely related to the l2 distance of the parameters from the initialization (learning distance)

So, may changes in learning distance reflect the trend of double decline?

As can be seen from the figure, when the accuracy rate decreases, the overall learning distance tends to increase, and the highest point corresponds to the lowest point of the accuracy rate; when the accuracy rate increases, the learning distance also decreases accordingly. The change in learning distance is generally in line with the trend of sparse double descent (although when the test accuracy declines for the second time, it is difficult for the learning distance to rise again due to too few trainable parameters).

Relation to the lottery ticket hypothesis

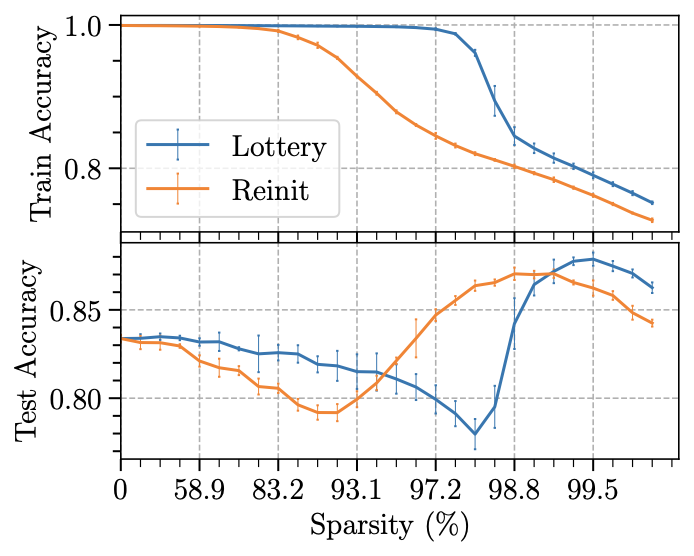

We also conduct experiments comparing winning tickets with re-random initialization. Interestingly, the initialization of the lottery ticket hypothesis does not always outperform the re-initialization of the network in the double-descent scenario.

It can be seen from the figure that the result of Reinit is shifted to the left compared to Lottery as a whole, that is to say, the Reinit method is inferior to Lottery in terms of retaining the expressive ability of the model. This also validates the lottery ticket hypothesis: even if the structure of the model is the same, the performance of the model may be very different when trained from different initializations.

Conclusion

In the process of doing this research, we observed some amazing and counterintuitive experimental phenomena and attempted to interpret them analytically. However, the existing theoretical work has not been able to fully explain the reasons for the existence of these phenomena.

For example, when the training accuracy is close to 100%, the test accuracy will gradually decrease with pruning. Why does the model not forget the complex features in the data at this time, but overfit the noise more seriously? We also observed that the learning distance of the model will increase first and then decrease with the increase of sparsity. Why does pruning cause such a change in the learning distance of the model? And the double descent phenomenon of deep learning models often needs to add label noise to the input to be observed

There are still many questions that remain unanswered. We are also now working on a new theoretical work that will shed light on one or more of these issues. I hope that the fog can be cleared as soon as possible to find out the essential reasons behind this phenomenon.

Paper: Sparse Double Descent: Where Network Pruning Aggravates Overfitting